Arm's tiny Cortex-M52 packs AI punch for small devices

Helium tech to end up on $1-$2 SoCs claimed to bring big performance gains for ML workloads

Arm is aiming to infuse AI into connected devices and other low-power hardware with an addition to its Cortex-M line-up of microcontroller core designs.

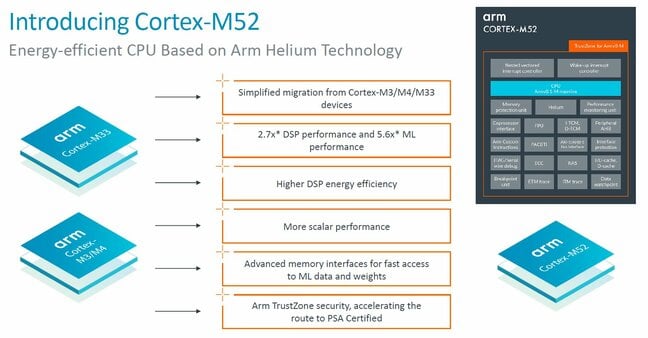

The Brit chip operation has revealed the Cortex-M52, hailed as its smallest and most cost-efficient processor with the Helium vector processing extensions to accelerate machine learning (ML) and digital signal processing (DSP) functions.

Arm is also selling a simplified software development platform to help customers make the most of these features.

Although generative AI tools have dominated the headlines of late, the kind of AI that Arm has in mind here involves relatively simple algorithms.

"AI is everywhere. But to realize the potential of AI for IoT, we need to bring machine learning optimized processing to even the smallest and lowest-power endpoint devices," said Paul Williamson, Arm SVP and general manager for its IoT business.

He said AI is critical to extracting "intelligence" from the vast amounts of data that digital devices produce and collect, and that this capability is now reaching smaller, cost-sensitive devices, which are set to become smarter and more capable.

Arm's Cortex-M products are for the most part single-core 32-bit designs, optimized for small size, low power, and low cost.

Typical functions that Arm expects to see deployed on the Cortex-M52 include vibration or anomaly detection, sensor fusion (merging data from multiple sensors for greater accuracy), and keyword detection using natural language processing (NLP).
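
To make "sensor fusion" concrete, here is a minimal sketch of one textbook approach, inverse-variance weighting, in which each sensor's reading counts in proportion to how precise it is. The function name, the sensor values, and the choice of technique are illustrative assumptions; Arm has not said which fusion methods its customers use.

```python
def fuse(readings):
    """Fuse (value, variance) pairs from several sensors by inverse-variance weighting.

    Illustrative only: a hypothetical helper, not an Arm or CMSIS API.
    """
    weights = [1.0 / var for _, var in readings]  # precise sensors get larger weights
    total = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total  # always smaller than any single sensor's variance
    return fused_value, fused_variance


# Two hypothetical temperature sensors: one noisy (variance 4.0), one precise (1.0)
value, variance = fuse([(21.0, 4.0), (20.0, 1.0)])
print(value, variance)  # 20.2 0.8
```

The fused estimate lands closer to the precise sensor and carries a lower variance than either input, which is the "greater accuracy" the technique is after.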

Helium, or the M-Profile Vector Extension (MVE), adds more than 150 new scalar and vector instructions that operate on 128-bit wide registers. The Cortex-M55 and Cortex-M85 cores were the first to support this technology; the Cortex-M52, as its number suggests, sits below them in the range.
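
Why a 128-bit register matters for ML: with 8-bit integer data, as is common in quantized models, one register holds 16 values, so a single vector operation can do the work of 16 scalar ones. The Python below is a conceptual simulation of that lane arithmetic under the int8 assumption; it does not use or imitate real Helium intrinsics, and every name in it is hypothetical.

```python
# Conceptual model only: these names are illustrative, not Arm APIs.
REGISTER_BITS = 128                 # Helium vector register width
LANE_BITS = 8                       # assuming int8 data, common for quantized ML
LANES = REGISTER_BITS // LANE_BITS  # 16 lanes per register


def vector_dot_int8(a, b):
    """Dot product of two int8 sequences, LANES elements per simulated vector step."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    acc = 0    # wide accumulator so int8 products cannot overflow
    steps = 0  # number of simulated vector multiply-accumulate operations
    for start in range(0, len(a), LANES):
        chunk_a = a[start:start + LANES]
        chunk_b = b[start:start + LANES]
        # One simulated vector operation: multiply every lane pair, sum across lanes
        acc += sum(x * y for x, y in zip(chunk_a, chunk_b))
        steps += 1
    return acc, steps


result, steps = vector_dot_int8(list(range(32)), [1] * 32)
print(result, steps)  # 496 2, versus 32 steps for a one-element-at-a-time loop
```

In practice developers would rely on compiler auto-vectorization or Arm's optimized libraries rather than hand-written loops; the sketch only shows where the claimed speedup over scalar cores comes from.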

Nevertheless, Arm reckons the inclusion of Helium means that DSP operations are 2.7x faster and ML performance is up to 5.6x greater than on previous generations such as the Cortex-M33, without the need for a dedicated neural processing unit (NPU).

Williamson told us that customers migrating from a previous generation should expect not just a performance boost, but also greater energy efficiency and a 23 percent reduction in silicon area.

"We believe Cortex-M52 will be critical in removing barriers to ML adoption, and enabling deployment on the smallest of devices, bringing AI into the reach of everyone," he said.

For developers, Arm said it is bringing AI support into a unified toolchain to make coding apps for the Cortex-M52 simpler.

Citing the example of a predictive maintenance algorithm, Williamson said something like this would typically use DSP functions for signal conditioning and feature extraction, before a neural network classifies events from those features.

"That's where our Helium technology comes in. The developer can code in a single language against a common API, and achieve that performance uplift that they need in both the DSP and ML elements of their application. And there's no need for them to understand the specific hardware, like details of the processor that sits beneath," he claimed.
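
The two-stage pipeline Williamson describes might look roughly like the sketch below: a DSP stage that removes a vibration signal's DC offset and extracts RMS and peak features, followed by a classifier. To keep it short, a single logistic unit stands in for the neural network, and its weights are made up; a real model would be trained offline and would almost certainly be larger. All function names are hypothetical.

```python
import math


def condition(signal):
    """Signal conditioning: remove the DC offset (mean) from a vibration trace."""
    mean = sum(signal) / len(signal)
    return [s - mean for s in signal]


def extract_features(signal):
    """Feature extraction: RMS energy and peak amplitude."""
    rms = math.sqrt(sum(s * s for s in signal) / len(signal))
    peak = max(abs(s) for s in signal)
    return [rms, peak]


# Hypothetical weights standing in for a trained model
WEIGHTS = [2.0, 1.0]
BIAS = -4.0


def classify(features):
    """Single logistic unit: estimated probability that the machine is abnormal."""
    z = sum(w * f for w, f in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def is_anomalous(signal, threshold=0.5):
    """Full pipeline: DSP stage, then ML stage, then a decision threshold."""
    return classify(extract_features(condition(signal))) > threshold


quiet = [1.0 + 0.1 * (-1) ** i for i in range(64)]  # small vibration around an offset
noisy = [1.0 + 2.0 * (-1) ** i for i in range(64)]  # strong vibration, same offset
print(is_anomalous(quiet), is_anomalous(noisy))     # False True
```

Both stages reduce to multiply-accumulate loops over arrays, which is why a single vector extension can speed up the DSP and ML halves alike.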

Williamson added that some of the developers in Arm's ecosystem create tools to help port an ML model that might have been built and trained on a much larger system.

"You may need to quantize that model and bring it down into a much smaller memory footprint and test how it performs in an embedded environment," he said.
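
One common way to quantize a model is symmetric per-tensor int8 quantization, sketched below: each 32-bit float weight is replaced by an 8-bit integer plus a single shared scale factor, cutting weight storage to roughly a quarter. This is a generic illustration with made-up weights and hypothetical function names; it is not necessarily the scheme used by the tools Williamson mentions.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the symmetric int8 range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats, e.g. to test accuracy loss."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]  # made-up float32 weights
q, scale = quantize_int8(weights)

float_bytes = len(weights) * array("f", weights).itemsize  # 4 bytes per float32
int8_bytes = len(q) * array("b", q).itemsize              # 1 byte per int8
print(q, float_bytes, int8_bytes)  # [50, -127, 0, 100] 16 4
```

Each dequantized weight differs from the original by at most half a scale step, and testing whether that rounding error hurts accuracy on real data is the "test how it performs" step Williamson refers to.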

Cortex-M52 will also be available on Arm Virtual Hardware, a cloud-based service that enables software development in advance of any actual silicon being ready.

Like other Arm designs, the Cortex-M52 is licensed to chipmakers to integrate into an end product, such as a system-on-chip (SoC). Arm said the first silicon based on Cortex-M52 is expected to arrive in 2024.

These are likely to be chips in the $1 or $2 range for the majority of the volume, Williamson said, "but there is absolutely the potential that we'll see it configured in slightly richer and more capable devices as well." ®