Adobe sells fake AI-generated Israel-Hamas war images – then the news ran them as real

The world needs a timeout moment

Violent AI-generated photos fictionalizing the ongoing deadly Israel-Hamas conflict are not only being sold via Adobe's stock image library; some news publishers are also buying the pics and using them in online articles as if they were real.

Last year, the Photoshop titan announced it would host and sell people's images produced using machine-learning tools via its Adobe Stock library. Adobe gives those artists a 33 percent cut of the revenue from those pictures, meaning creators could make between 33 cents and $26.40 every time their image is licensed and downloaded.

Generative AI technology has advanced and become more accessible and easier to use. Anyone can turn to a variety of text-to-image tools to produce realistic-looking images. Some people have now decided to use them to create fake images depicting the war between Israel and Hamas in Gaza, and are selling those images on Adobe Stock.

Although these images are labeled as "generated by AI" in the image library, the disclosure is often not carried forward when they are downloaded and posted elsewhere online, including in news articles run by small-time outlets that reportedly did not mark the snaps as synthetic. Hopefully that's not a sign of things to come.

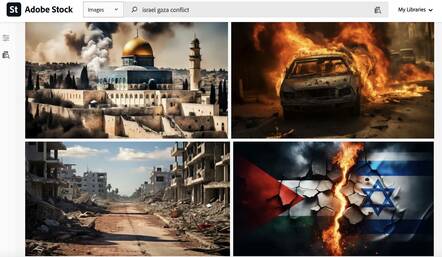

An Adobe Stock image titled "Conflict between Israel and Palestine generative AI," which shows black clouds of smoke billowing from buildings, appears to have been published in numerous internet articles as if it were real, as first reported by Crikey, an Aussie news title.

A quick search on Adobe Stock shows similar war-related AI-generated images portraying airstrikes, burning vehicles, and destroyed buildings in Gaza.


Experts have repeatedly warned that AI will be used to spread misinformation online, and the problem is becoming harder to tackle as more and more realistic-looking synthetic content is posted and shared on the internet. Several outfits are trying, in various ways, to watermark AI-generated content so netizens can attempt to tell what's real and what's fake.

Under the Content Authenticity Initiative, tech and journalism orgs including Adobe, Microsoft, the BBC, and the New York Times are attempting to implement and champion Content Credentials, which uses file metadata to highlight the source of an image, be that human or machine-learning software.

Content Credentials, however, hasn't been deployed in practical settings yet, and requires cooperation from social networks, publishers, artists, and application, browser, and generative AI developers to work as hoped.

"Adobe Stock is a marketplace that requires all generative AI content to be labeled as such when submitted for licensing," a spokesperson for the Photoshop giant told PetaPixel.

"These specific images were labeled as generative AI when they were both submitted and made available for license in line with these requirements. We believe it's important for customers to know what Adobe Stock images were created using generative AI tools.

"Adobe is committed to fighting misinformation, and via the Content Authenticity Initiative, we are working with publishers, camera manufacturers and other stakeholders to advance the adoption of Content Credentials, including in our own products. Content Credentials allows people to see vital context about how a piece of digital content was captured, created or edited including whether AI tools were used in the creation or editing of the digital content."

The Register has asked Adobe for further comment. ®